How Measures Are Scored

The IMPACT Measures Tool® was developed to address the diverse needs of early childhood and parenting initiatives. Our team of measurement and evaluation experts uses a research-driven scoring method to examine each measure’s cost, usability, cultural relevance, and technical merit. Scores are based on information available from developer websites, technical manuals, and published peer-reviewed studies.

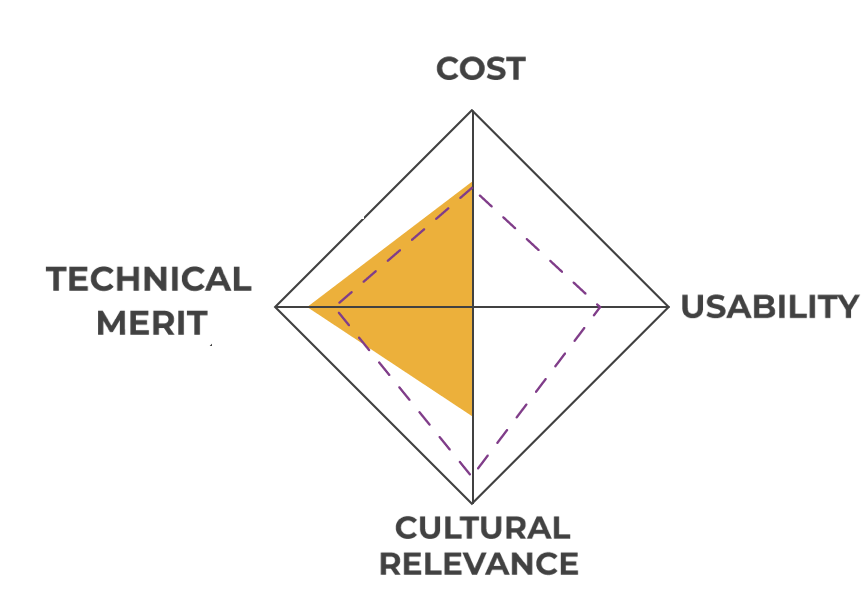

Scoring Diamond

The scoring diamond is a visual representation of how well a measure scores in each of the four key categories: cost, usability, cultural relevance, and technical merit. Each category is assigned to a specific corner of the scoring diamond. The shaded region depicts the score of a measure in relation to the maximum score of 10 possible points for each category.

IMPACT minimum score: We have highlighted measures that do not meet IMPACT’s recommended minimum score for technical merit. These measures either do not have sufficient technical merit evidence to score, or the evidence available is minimal and unsatisfactory.

You can also set your preferences for a desired score in each of the four key categories. Once you set preferences, a dotted line will display on the scoring diamond, indicating how each measure scores in comparison to your desired preferences.
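As a rough illustration of the comparison the dotted line represents, the sketch below checks a measure's four category scores against a set of desired scores. The function name `meets_preferences`, the dictionary keys, and the example numbers are assumptions made for illustration; they are not taken from the IMPACT website's implementation.

```python
CATEGORIES = ("cost", "usability", "cultural_relevance", "technical_merit")
MAX_SCORE = 10  # each category is scored out of 10 possible points


def meets_preferences(scores, preferences):
    """Return, per category, whether the measure's score reaches the desired score."""
    result = {}
    for category in CATEGORIES:
        score = scores[category]
        if not 0 <= score <= MAX_SCORE:
            raise ValueError(f"{category} score must be between 0 and {MAX_SCORE}")
        # Assumption: a category with no stated preference is treated as always met.
        desired = preferences.get(category, 0)
        result[category] = score >= desired
    return result


# Hypothetical measure and preferences, for illustration only.
example_scores = {
    "cost": 10,
    "usability": 7,
    "cultural_relevance": 4,
    "technical_merit": 8,
}
example_preferences = {"usability": 6, "cultural_relevance": 5}
comparison = meets_preferences(example_scores, example_preferences)
```

In this hypothetical case, the measure falls short of the desired score only for cultural relevance.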

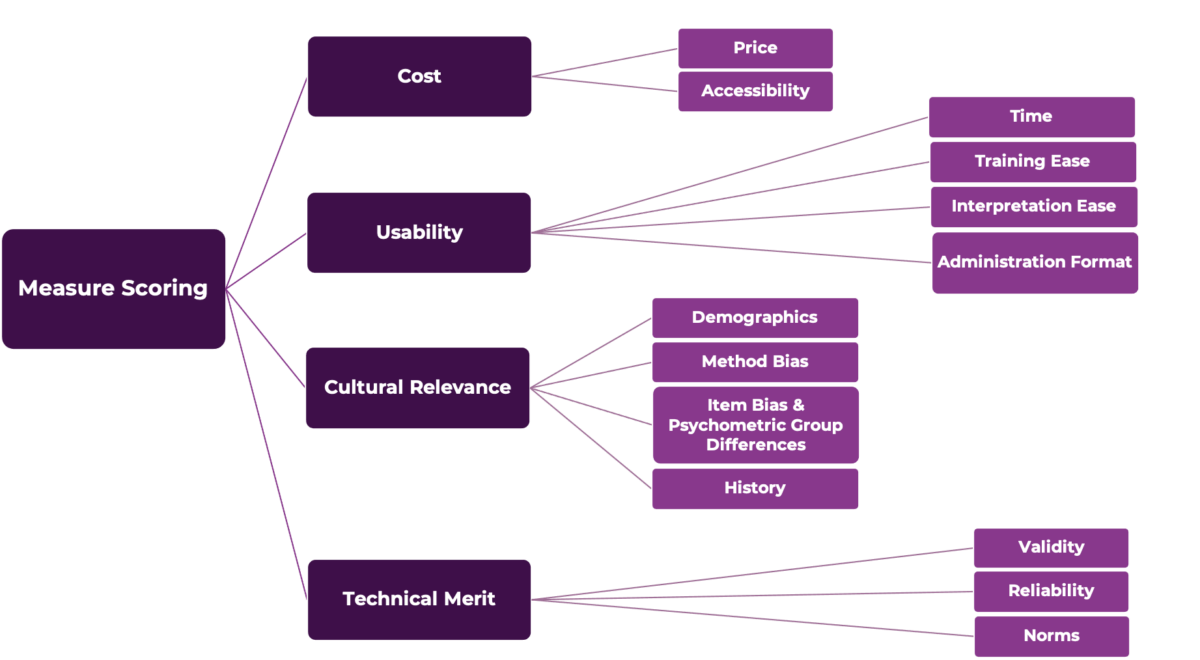

Overview of Scoring System

Four Key Categories

I. Cost (10 points): How much does this measure cost?

The cost of a measure is determined by its price and how easy it is to access. Measures that cost less and that are easier to access receive higher scores.

Price (6 points)

The IMPACT Measures Tool® includes information on both free and at-cost measures. Each measure is assigned a score based on the financial costs required to administer it. For example, a measure might require purchasing a license to use it, additional user and/or training manuals, or hard copies of administration materials.

Accessibility (4 points)

Each measure is also scored on how easy it is to access, based on whether the actual measure is downloadable online and ready for use. Measures that are free but require an account or contacting the developer for access are not considered accessible in the scoring system. At-cost measures do not receive any points in this subcategory.

II. Usability (10 points): How easy is it to use this measure?

Usability represents how easy it is to administer a measure and to interpret the results. Measures score higher if they are easier to administer and interpret.

Time (4 points)

Scores in this subcategory reflect how long it takes to administer the measure, including any setup required, delivery of materials, result calculation, and interpretation.

Training ease (3 points)

Scores are assigned based on how difficult it is to learn to administer the measure, including any equipment, materials, and standardized language needed.

Interpretation ease (2 points)

Scores are assigned based on how easy it is to interpret the measure’s results, such as whether the measure is norm-referenced (i.e., allowing comparison of scores to a norming sample), whether supplemental materials are provided, and/or how thoroughly the coding process is described (if applicable).

Administration Format (1 point)

Scores are assigned based on how many formats the measure can be administered in (i.e., paper and/or electronic). Measures are also scored based on whether internet access is required to administer the measure.

III. Cultural Relevance (10 points): Does this measure serve different groups?

Cultural relevance explores the extent to which measures are developed with different communities in mind, and the steps taken to prevent or address measurement bias. Measures are subject to specific scoring criteria depending on whether they are developed for use in a single country, internationally (i.e., across multiple countries), or for a specific program.

Demographics (2 points)

Scores reflect how inclusive the validation sample is for age, gender, ethnicity, socioeconomic status, linguistic diversity, geographic regions, and urbanicity.

Method Bias (2 points)

Scores in this subcategory are based on the measure’s item generation process. This includes whether the measure’s authors consulted the community that the measure was intended for and developed it with a diverse population in mind.

Item Bias & Psychometric Group Differences (5 points)

Measures receive higher scores if authors conducted analyses to identify potential bias between demographic groups (e.g., men and women) at the item level and addressed any bias found. Scores in this subcategory also reflect whether analyses were conducted to identify potential bias in the measure as a whole and whether that bias was addressed. Measures receive higher scores if the developers statistically demonstrated that the measure was unbiased with respect to these dimensions.

History (1 point)

Our history subscore gives a measure credit if it was originally developed and/or validated in the past twenty years.

IV. Technical Merit (10 points): How accurate is this measure?

Technical merit reflects how consistent and accurate a measure is.

Validity (4 points)

Validity indicates whether a measure accurately measures the topic that it is intended to measure.

Scores for this subcategory are based on the measure’s stated purpose, the extent to which measure items and results represent the intended topic (such as parental stress), and the expected relationships between the measure topic, other topics, and/or individual characteristics.

For example, a measure intended to assess children’s vocabulary would be scored on validity based on whether it actually measures vocabulary skills versus other skills such as working memory or attention. For additional details and examples on types of validity, please review our Scoring Guidebook.

For screening tools, validity is assessed through sensitivity and specificity. Screening tools receive higher scores for accurately identifying individuals at risk while avoiding over-identifying individuals who are not at risk (i.e., false positives).
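Sensitivity and specificity are standard statistics computed from counts of correct and incorrect screening decisions. The sketch below shows the usual definitions; the function names and the example counts are hypothetical, and it does not reproduce how IMPACT converts these statistics into points.

```python
def sensitivity(true_positives, false_negatives):
    """Proportion of at-risk individuals that the screener correctly flags."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives, false_positives):
    """Proportion of not-at-risk individuals that the screener correctly clears."""
    return true_negatives / (true_negatives + false_positives)


# Hypothetical validation sample: 50 at-risk and 200 not-at-risk individuals.
sens = sensitivity(true_positives=40, false_negatives=10)
spec = specificity(true_negatives=180, false_positives=20)
```

With these made-up counts, the screener flags 40 of the 50 at-risk individuals and clears 180 of the 200 who are not at risk; the 20 remaining cases are the false positives described above.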

Reliability (5 points)

Reliability indicates how consistent the results of a measure are. There are multiple types of reliability that factor into a measure’s score. These include rating the consistency of:

- Measure’s results over time (test-retest reliability)

- Different raters of a measure (inter-rater reliability)

- Items within a measure (internal consistency)

Note: Not all types of reliability apply for every measure.

Norms (1 point)

Measures are also reviewed for whether they report means and standard deviations of scores, which allow users to calculate where scores fall with respect to the norming sample.
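To show why reported means and standard deviations matter, the sketch below places an individual score relative to a norming sample using a z-score, then converts it to an approximate percentile under the assumption that scores in the norming sample are normally distributed. The function names and the example numbers are hypothetical.

```python
from statistics import NormalDist


def z_score(raw_score, norm_mean, norm_sd):
    """Number of standard deviations a score lies above or below the norm mean."""
    if norm_sd <= 0:
        raise ValueError("norm_sd must be positive")
    return (raw_score - norm_mean) / norm_sd


def approximate_percentile(raw_score, norm_mean, norm_sd):
    """Percentile rank, assuming scores are normally distributed (an assumption)."""
    return 100 * NormalDist().cdf(z_score(raw_score, norm_mean, norm_sd))


# Hypothetical norming sample with mean 100 and standard deviation 15.
z = z_score(115, norm_mean=100, norm_sd=15)
percentile = approximate_percentile(115, norm_mean=100, norm_sd=15)
```

Here a score of 115 sits one standard deviation above the hypothetical norm mean, which corresponds to roughly the 84th percentile under the normality assumption.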

Measure Detail Page Definitions

Measure Type: How is the measure administered?

Options include Survey, Direct Assessment, Observation, and Interview. If the measure was designed for use as a Screening Tool, this is also noted.

Topic: What general domain is the measure designed to assess?

Topics include Caregiver-Child Interaction, Social-Emotional Development, Classroom/Childcare Quality, and more.

Publication Year: What year was this measure published?

Publication year refers to the year the measure’s original validation study or manual was published.

Age Range: What is the intended age range for this measure?

If the measure assesses children, or caregivers of children in a specific age range, the child age range is presented. If the measure was designed for use with adults, “Adult” is listed instead.

Subject: Who is this measure about?

Options include child, caregiver, teacher, family, clinician, and classroom/childcare setting. For example, if the measure assesses stress experienced by parents, the subject is “caregiver”. If the measure assesses child academic skills, the subject is “child”. Some measures are about the “family” (e.g., measures of family climate or household needs).

Respondent: Who completes the measure?

This refers to the individual who inputs the data (e.g., fills out the form on a survey, writes notes during an observation). The “respondent” may sometimes be the subject themselves (e.g., when a parent responds to a survey about their parenting practices) or may be someone other than the subject (e.g., the researcher or clinician who codes behaviors observed during a parent-child interaction).

Administrator: Who administers the measure?

Surveys typically do not require an administrator unless specifically noted. Common administrators of other types of measures include clinicians, teachers, or researchers. For example, a direct assessment of child mathematical skills may be designed to be administered by that child’s teacher.

Electronic Version: Is an electronic version of this measure available?

This refers specifically to whether the measure can be administered electronically. Measures that have electronic scoring or data tracking systems but require a person to administer the measure are not included in this category.

Time to Complete: How long does this measure take to complete?

This refers to the process of measure administration and does not include time required for scoring or interpretation of results. When information regarding time to complete cannot be found in available materials, this range is estimated based on the number of items.

NOTE: Some measures take longer than the range currently accommodated by the IMPACT website (0-120 minutes). When this is the case, time to complete is listed as 120 minutes and the true time to complete is noted in the description of the measure at the top of the page.
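The capping rule in this note can be sketched as follows. The constant and function names are hypothetical, and the sketch assumes time is expressed in minutes.

```python
MAX_DISPLAY_MINUTES = 120  # upper end of the range the website accommodates


def displayed_time_to_complete(true_minutes):
    """Return the value listed on the site: the true time, capped at 120 minutes."""
    if true_minutes < 0:
        raise ValueError("true_minutes must not be negative")
    return min(true_minutes, MAX_DISPLAY_MINUTES)


# Hypothetical measures taking 45 and 180 minutes to administer.
short_display = displayed_time_to_complete(45)
long_display = displayed_time_to_complete(180)
```

For the 180-minute example, the listed value is 120 minutes, and the true time would appear in the measure's description instead.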

Cost: How is this measure accessed?

Measures are categorized either as “at cost,” “free & accessible” (i.e., when they are freely downloadable from the developer), or “free & not accessible” (i.e., when access requires creating a free online account or contacting the developer directly to request the measure).
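The three access categories can be expressed as a simple decision rule. In the sketch below, the function name and its two boolean inputs are assumptions made for illustration; only the category labels come from the definitions above.

```python
def cost_category(is_free, freely_downloadable):
    """Classify a measure into one of the three access categories."""
    if not is_free:
        return "at cost"
    if freely_downloadable:
        return "free & accessible"
    # Free, but access requires an account or contacting the developer.
    return "free & not accessible"


# Hypothetical examples of each category.
paid = cost_category(is_free=False, freely_downloadable=False)
open_access = cost_category(is_free=True, freely_downloadable=True)
on_request = cost_category(is_free=True, freely_downloadable=False)
```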

Languages: What languages is this measure available in?

The language versions listed here may or may not have been validated. They are the versions listed by the measure developer; the list may not be comprehensive, and other translations or validations may be available.

Did You Know: Are there other key pieces of information to know about this measure?

Although not comprehensive, this category aims to provide important caveats or guidance that users should take into account when considering selection of this measure for use (e.g., unique information about access).

References: Where did IMPACT collect information about this measure?

Main sources include the measure’s official website, as well as original validation studies and manuals. APA references for original validation studies or manuals are provided in this category.

More Information

Looking for more in-depth information about our scoring system? View our Scoring Guidebook and our Evidence Guide.

Measurement and Evaluation Services Consultation

Looking for support with research design, implementation, or evaluation?

Request a free consultation call with our team to discuss our measurement and evaluation services.

Contact Us

We are committed to supporting early childhood programs. Have feedback or questions? Contact us or visit our FAQ.

Email: ecprism@instituteforchildsuccess.org